Fine-tuning(微调)能带来什么好处?主要有以下4点:

1. 更高质量的结果;相对于提示prompt来说;

2. 能力更强;允许训练更多的示例,远远超出了prompt的能力;

3. 减少令牌(token)使用,因为可以使用更短的提示;

4. 请求延迟的降低。

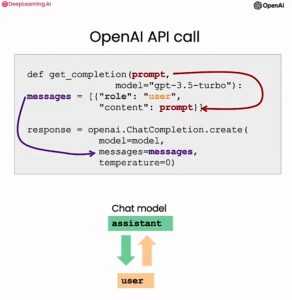

以下我们在Jupyter Notebook环境下,以gpt-3.5-turbo的模型为例,做下实验。

GPT模型已经在大量文本上进行了预训练。为了有效地使用这些模型,我们通常在提示(prompt)中包括指令及多个示例。给模型一些例子来展示如何执行任务通常被称为”少样本学习”(few-shot learning)。

通过提供比prompt更多例子的训练,微调可以改进少样本学习(few-shot learning),以达到更强大的能力。一旦模型经过微调,您就不需要在提示中提供太多示例。这可以节省成本并实现更低的延迟请求。

微调的步骤:

- 准备并上传训练数据。

- 训练一个新的微调模型。

- 使用您的微调模型。

一、场景介绍

我们要做一个线上回复养宠问题的聊天机器人。养宠问题分为二类:一类是通用性养宠的问题;一类是轻问诊。关于这两类问题,大模型的回复十分笼统,且输出不太规范。因此,我们基于已开展的宠物咨询和诊疗业务,通过兽医校对,整理了很多问答对。寄希望于对大模型进行微调,以给到用户更具体的回复。同时,我们将提示工程里的输出和风格要求也在训练数据中,以节省每次调用成本。

import openai

api_key="your key"

二、数据转换

openai的训练数据要以JSONL的格式上传。

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]}

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "How far is the Moon from Earth?"}, {"role": "assistant", "content": "Around 384,400 kilometers. Give or take a few, like that really matters."}]}

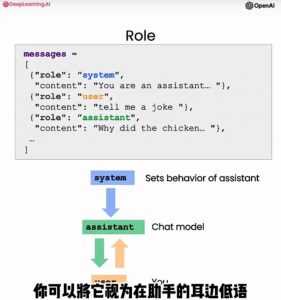

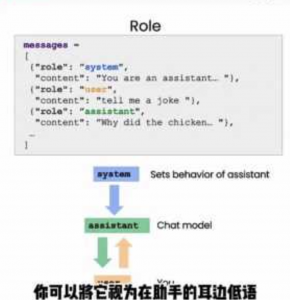

每一个Message,由三个role组成:system user、assistant。system提供高级指令,告诉大模型要扮演什么角色;user提出查询或提示,如:猫猫太肥了怎么办?;assistant是大模型的回复。请见下图分解。

下面的代码是用于做训练数据转换的。原数据文件:training_data.JSON

[

{

"Questions": "我家猫太胖了怎么减肥呢?",

"Replies": "原因分析: 摸着肋部,感觉以肉为主,但稍微用力也可以感受到肋骨,那就理想体重。\n标准放宽一点的话,肋骨部位上的肉再多点,少少接近超重也ok,但要严重超标的体重得好好控制一下,而且要立刻行动.\n推荐方案:足够的热量差:高蛋白 蛋白质对肌肉组织最关键,而肌肉又是基础新陈代谢的一大源头;再加上蛋白质热量不大,饱腹感更强,猫对蛋白质的需求还高得“变态”,比人高3-6倍。要减肥,就给猫吃蛋白质足够高的主食。 定时,定量 猫习惯昼伏夜出,进食时间集中清晨、黄昏、后半夜,我们可以将食物分成4份:\n早上:你出门前给猫吃的一份食\n晚上:你回家后给猫吃的那餐\n睡前:你睡觉前给猫再吃一餐\n零食:主食里留一部分出来,猫讨饭时糊弄猫 饱腹感 肉类的胃排空速度似乎慢于猫粮,纤维素也可以增加一些饱腹感,如果猫实在忍不住饿,把食物中一部分改为主食冻干/罐头或者干脆:给化毛片。化毛片富含纤维,热量也不算大,这里其实就是骗饱腹感用的。"

},

{

"Questions": "我家猫吃塑料,毛线是怎么回事?",

"Replies": "原因分析: 猫“异食癖”的定义有待商榷,如果你的猫不会把异物吃下肚,不算异食癖。小猫吃猫砂可能是气味影响或者食物认知未形成导致的。\n为了获取注意力、单纯无聊、环境压力、祈求食物或者部分猫的“基因”才“异食癖”的主要原因。\n丰富食物类型,增加食物喂食频率,与猫高强度互动都是改善异食癖的潜在有效办法。\n很遗憾,最有用的还是把杂物收起来!!!\n.\n推荐方案:控制压力来源:\n最近有陌生人?动物?还是处于新环境?亦或者有噪音?如果有条件,给猫一个相对安静的环境和躲藏处,与近期的潜在干扰因素隔离开。看情况可以放零食和加餐,可能肚子饿、嘴巴馋也算压力。 避免接触异食对象:让猫咪无法接触异食对象,同时留意猫咪是否有营养不良、微量元素缺乏或其他疾病问题。\n一般来说,危险度排名如下:百合科植物>>尼龙线、鞋带等线状异物>>>猫砂>>小块塑料片。百合科植物很容易造成猫肾脏损伤,而强韧的线状异物有几率造成肠叠套、撕裂伤等,是需要手术处理的。"

}

]

转换成以下格式,并存于数据文件:training_dataR1.JSONL

{"messages": [{"role": "system", "content": "你是一位宠物喂养和健康专家,你回答用户提出的问题。回答方式分为两个自然段,分别阐述原因分析和推荐方案。尽量采用我给你的训练数据."}, {"role": "user", "content": "我家猫太胖了怎么减肥呢?"}, {"role": "assistant", "content": "原因分析: 摸着肋部,感觉以肉为主,但稍微用力也可以感受到肋骨,那就理想体重。\n标准放宽一点的话,肋骨部位上的肉再多点,少少接近超重也ok,但要严重超标的体重得好好控制一下,而且要立刻行动.\n推荐方案:足够的热量差:高蛋白 蛋白质对肌肉组织最关键,而肌肉又是基础新陈代谢的一大源头;再加上蛋白质热量不大,饱腹感更强,猫对蛋白质的需求还高得“变态”,比人高3-6倍。要减肥,就给猫吃蛋白质足够高的主食。 定时,定量 猫习惯昼伏夜出,进食时间集中清晨、黄昏、后半夜,我们可以将食物分成4份:\n早上:你出门前给猫吃的一份食\n晚上:你回家后给猫吃的那餐\n睡前:你睡觉前给猫再吃一餐\n零食:主食里留一部分出来,猫讨饭时糊弄猫 饱腹感 肉类的胃排空速度似乎慢于猫粮,纤维素也可以增加一些饱腹感,如果猫实在忍不住饿,把食物中一部分改为主食冻干/罐头或者干脆:给化毛片。化毛片富含纤维,热量也不算大,这里其实就是骗饱腹感用的。"}]}

{"messages": [{"role": "system", "content": "你是一位宠物喂养和健康专家,你回答用户提出的问题。回答方式分为两个自然段,分别阐述原因分析和推荐方案。尽量采用我给你的训练数据."}, {"role": "user", "content": "我家猫吃塑料,毛线是怎么回事?"}, {"role": "assistant", "content": "原因分析: 猫“异食癖”的定义有待商榷,如果你的猫不会把异物吃下肚,不算异食癖。小猫吃猫砂可能是气味影响或者食物认知未形成导致的。\n为了获取注意力、单纯无聊、环境压力、祈求食物或者部分猫的“基因”才“异食癖”的主要原因。\n丰富食物类型,增加食物喂食频率,与猫高强度互动都是改善异食癖的潜在有效办法。\n很遗憾,最有用的还是把杂物收起来!!!\n.\n推荐方案:控制压力来源:\n最近有陌生人?动物?还是处于新环境?亦或者有噪音?如果有条件,给猫一个相对安静的环境和躲藏处,与近期的潜在干扰因素隔离开。看情况可以放零食和加餐,可能肚子饿、嘴巴馋也算压力。 避免接触异食对象:让猫咪无法接触异食对象,同时留意猫咪是否有营养不良、微量元素缺乏或其他疾病问题。\n一般来说,危险度排名如下:百合科植物>>尼龙线、鞋带等线状异物>>>猫砂>>小块塑料片。百合科植物很容易造成猫肾脏损伤,而强韧的线状异物有几率造成肠叠套、撕裂伤等,是需要手术处理的。"}]}

转换的代码如下:

# 引入相应的包

import json

import tiktoken # for token counting

import numpy as np

from collections import defaultdict

# 读原数据文件 Read the original data from 'training_data.JSON'

with open('training_data.JSON', 'r', encoding='utf-8') as file:

original_data = json.load(file)

# 转化成目标格式 Convert the original data to the new format and save it

converted_data = []

for item in original_data:

system_message = {

"role": "system",

"content": "你是一位宠物喂养和健康专家,你回答用户提出的问题。回答方式分为两个自然段,分别阐述原因分析和推荐方案。尽量采用我给你的训练数据."

}

user_message = {

"role": "user",

"content": item["Questions"]

}

assistant_message = {

"role": "assistant",

"content": item["Replies"]

}

messages = [system_message, user_message, assistant_message]

converted_data.append({"messages": messages})

# 保存成目标文件

with open('training_dataR1.JSONL', 'w', encoding='utf-8') as output_file:

for data in converted_data:

json.dump(data, output_file, ensure_ascii=False)

output_file.write('\n')

在当前路径,就已经有training_dataR1.JSONL文件。

我们来验证一下该文件,是否有59个训练数据,并显示第1个。

data_path = "training_dataR1.JSONL"

# Load the dataset

with open(data_path, 'r', encoding='utf-8') as f:

dataset = [json.loads(line) for line in f]

# Initial dataset stats

print("Num examples:", len(dataset))

print("First example:")

for message in dataset[0]["messages"]:

print(message)

Num examples: 59

First example:

{'role': 'system', 'content': '你是一位宠物喂养和健康专家,你回答用户提出的问题。回答方式分为两个自然段,分别阐述原因分析和推荐方案。尽量采用我给你的训练数据.'}

{'role': 'user', 'content': '我家猫太胖了怎么减肥呢?'}

{'role': 'assistant', 'content': '原因分析: 摸着肋部,感觉以肉为主,但稍微用力也可以感受到肋骨,那就理想体重。\n标准放宽一点的话,肋骨部位上的肉再多点,少少接近超重也ok,但要严重超标的体重得好好控制一下,而且要立刻行动.\\n推荐方案:足够的热量差:高蛋白 蛋白质对肌肉组织最关键,而肌肉又是基础新陈代谢的一大源头;再加上蛋白质热量不大,饱腹感更强,猫对蛋白质的需求还高得“变态”,比人高3-6倍。要减肥,就给猫吃蛋白质足够高的主食。 定时,定量 猫习惯昼伏夜出,进食时间集中清晨、黄昏、后半夜,我们可以将食物分成4份:\n早上:你出门前给猫吃的一份食\n晚上:你回家后给猫吃的那餐\n睡前:你睡觉前给猫再吃一餐\n零食:主食里留一部分出来,猫讨饭时糊弄猫 饱腹感 肉类的胃排空速度似乎慢于猫粮,纤维素也可以增加一些饱腹感,如果猫实在忍不住饿,把食物中一部分改为主食冻干/罐头或者干脆:给化毛片。化毛片富含纤维,热量也不算大,这里其实就是骗饱腹感用的。'}

我们对数据进行校验。如果有错误会显示出来。

# Format error checks

format_errors = defaultdict(int)

for ex in dataset:

if not isinstance(ex, dict):

format_errors["data_type"] += 1

continue

messages = ex.get("messages", None)

if not messages:

format_errors["missing_messages_list"] += 1

continue

for message in messages:

if "role" not in message or "content" not in message:

format_errors["message_missing_key"] += 1

if any(k not in ("role", "content", "name", "function_call") for k in message):

format_errors["message_unrecognized_key"] += 1

if message.get("role", None) not in ("system", "user", "assistant", "function"):

format_errors["unrecognized_role"] += 1

content = message.get("content", None)

function_call = message.get("function_call", None)

if (not content and not function_call) or not isinstance(content, str):

format_errors["missing_content"] += 1

if not any(message.get("role", None) == "assistant" for message in messages):

format_errors["example_missing_assistant_message"] += 1

if format_errors:

print("Found errors:")

for k, v in format_errors.items():

print(f"{k}: {v}")

else:

print("No errors found")

No errors found

我们来计算一下训练数据占了多少token。

encoding = tiktoken.get_encoding("cl100k_base")

# not exact!

# simplified from https://github.com/openai/openai-cookbook/blob/main/examples/How_to_count_tokens_with_tiktoken.ipynb

def num_tokens_from_messages(messages, tokens_per_message=3, tokens_per_name=1):

num_tokens = 0

for message in messages:

num_tokens += tokens_per_message

for key, value in message.items():

num_tokens += len(encoding.encode(value))

if key == "name":

num_tokens += tokens_per_name

num_tokens += 3

return num_tokens

def num_assistant_tokens_from_messages(messages):

num_tokens = 0

for message in messages:

if message["role"] == "assistant":

num_tokens += len(encoding.encode(message["content"]))

return num_tokens

def print_distribution(values, name):

print(f"\n#### Distribution of {name}:")

print(f"min / max: {min(values)}, {max(values)}")

print(f"mean / median: {np.mean(values)}, {np.median(values)}")

print(f"p5 / p95: {np.quantile(values, 0.1)}, {np.quantile(values, 0.9)}")

# Warnings and tokens counts

n_missing_system = 0

n_missing_user = 0

n_messages = []

convo_lens = []

assistant_message_lens = []

for ex in dataset:

messages = ex["messages"]

if not any(message["role"] == "system" for message in messages):

n_missing_system += 1

if not any(message["role"] == "user" for message in messages):

n_missing_user += 1

n_messages.append(len(messages))

convo_lens.append(num_tokens_from_messages(messages))

assistant_message_lens.append(num_assistant_tokens_from_messages(messages))

print("Num examples missing system message:", n_missing_system)

print("Num examples missing user message:", n_missing_user)

print_distribution(n_messages, "num_messages_per_example")

print_distribution(convo_lens, "num_total_tokens_per_example")

print_distribution(assistant_message_lens, "num_assistant_tokens_per_example")

n_too_long = sum(l > 4096 for l in convo_lens)

print(f"\n{n_too_long} examples may be over the 4096 token limit, they will be truncated during fine-tuning")

Num examples missing system message: 0

Num examples missing user message: 0

#### Distribution of num_messages_per_example:

min / max: 3, 3

mean / median: 3.0, 3.0

p5 / p95: 3.0, 3.0

#### Distribution of num_total_tokens_per_example:

min / max: 172, 1600

mean / median: 599.271186440678, 510.0

p5 / p95: 236.8, 1024.6000000000001

#### Distribution of num_assistant_tokens_per_example:

min / max: 75, 1420

mean / median: 473.23728813559325, 389.0

p5 / p95: 134.8, 876.2000000000003

0 examples may be over the 4096 token limit, they will be truncated during fine-tuning

基于上述的训练数据和token,大概要花多少费用?

MAX_TOKENS_PER_EXAMPLE = 4096

TARGET_EPOCHS = 3

MIN_TARGET_EXAMPLES = 100

MAX_TARGET_EXAMPLES = 25000

MIN_DEFAULT_EPOCHS = 1

MAX_DEFAULT_EPOCHS = 25

n_epochs = TARGET_EPOCHS

n_train_examples = len(dataset)

if n_train_examples * TARGET_EPOCHS MAX_TARGET_EXAMPLES:

n_epochs = max(MIN_DEFAULT_EPOCHS, MAX_TARGET_EXAMPLES // n_train_examples)

n_billing_tokens_in_dataset = sum(min(MAX_TOKENS_PER_EXAMPLE, length) for length in convo_lens)

print(f"Dataset has ~{n_billing_tokens_in_dataset} tokens that will be charged for during training")

print(f"By default, you'll train for {n_epochs} epochs on this dataset")

print(f"By default, you'll be charged for ~{n_epochs * n_billing_tokens_in_dataset} tokens")

Dataset has ~35357 tokens that will be charged for during training

By default, you'll train for 3 epochs on this dataset

By default, you'll be charged for ~106071 tokens

三、训练数据文件上传

from openai import OpenAI

client = OpenAI(api_key=api_key)

client.files.create(

file=open(data_path, "rb"),

purpose="fine-tune"

)

FileObject(id='file-htiLNH6OfDuVYuXnjgP3p7xA', bytes=80068, created_at=1699431789, filename='training_dataR1.JSONL', object='file', purpose='fine-tune', status='processed', status_details=None)

四、训练一个新的模型

用上面的文件ID,基于gpt-3.5-turbo,我们训练一个模型。以下是新建微调任务:

client.fine_tuning.jobs.create(

training_file="file-htiLNH6OfDuVYuXnjgP3p7xA",

model="gpt-3.5-turbo"

)

FineTuningJob(id='ftjob-FbfRd2U7BTAkggos5AO12jWl', created_at=1699431936, error=None, fine_tuned_model=None, finished_at=None, hyperparameters=Hyperparameters(n_epochs='auto', batch_size='auto', learning_rate_multiplier='auto'), model='gpt-3.5-turbo-0613', object='fine_tuning.job', organization_id='org-JQ3nn62Nnde4uERLZHxM0Kql', result_files=[], status='validating_files', trained_tokens=None, training_file='file-htiLNH6OfDuVYuXnjgP3p7xA', validation_file=None)

从现在开始,我们提交的任务正在排队。以下是关于微调任务常见的操作。如查看任务队列、中断、删除等。

from openai import OpenAI

client = OpenAI(api_key=api_key)

# List 10 fine-tuning jobs

client.fine_tuning.jobs.list(limit=10)

# Retrieve the state of a fine-tune

# client.fine_tuning.jobs.retrieve("ftjob-FbfRd2U7BTAkggos5AO12jWl")

# Cancel a job

# client.fine_tuning.jobs.cancel("ftjob-FbfRd2U7BTAkggos5AO12jWl")

# List up to 10 events from a fine-tuning job

# client.fine_tuning.jobs.list_events(id="ftjob-FbfRd2U7BTAkggos5AO12jWl", limit=10)

# Delete a fine-tuned model (must be an owner of the org the model was created in)

# client.models.delete("ft:gpt-3.5-turbo:acemeco:suffix:abc123")

SyncCursorPage[FineTuningJob](data=[FineTuningJob(id='ftjob-FbfRd2U7BTAkggos5AO12jWl', created_at=1699431936, error=None, fine_tuned_model='ft:gpt-3.5-turbo-0613:personal::8IavFxZw', finished_at=1699442524, hyperparameters=Hyperparameters(n_epochs=3, batch_size=1, learning_rate_multiplier=2), model='gpt-3.5-turbo-0613', object='fine_tuning.job', organization_id='org-JQ3nn62Nnde4uERLZHxM0Kql', result_files=['file-YDwJTKIv8TRi7MoTtw2LZWHD'], status='succeeded', trained_tokens=105717, training_file='file-htiLNH6OfDuVYuXnjgP3p7xA', validation_file=None)], object='list', has_more=False)

我们用查找,client.fine_tuning.jobs.list(limit=10)。最近10个jobs,只有1个job,status正在排队(queued)。过了大概4~5个小时,再查询,将JobID传入retrieve(), status得到succeeded.

# Retrieve the state of a fine-tune

client.fine_tuning.jobs.retrieve("ftjob-FbfRd2U7BTAkggos5AO12jWl")

FineTuningJob(id='ftjob-FbfRd2U7BTAkggos5AO12jWl', created_at=1699431936, error=None, fine_tuned_model='ft:gpt-3.5-turbo-0613:personal::8IavFxZw', finished_at=1699442524, hyperparameters=Hyperparameters(n_epochs=3, batch_size=1, learning_rate_multiplier=2), model='gpt-3.5-turbo-0613', object='fine_tuning.job', organization_id='org-JQ3nn62Nnde4uERLZHxM0Kql', result_files=['file-YDwJTKIv8TRi7MoTtw2LZWHD'], status='succeeded', trained_tokens=105717, training_file='file-htiLNH6OfDuVYuXnjgP3p7xA', validation_file=None)

微调后的模型名叫:ft:gpt-3.5-turbo-0613:personal::8IavFxZw

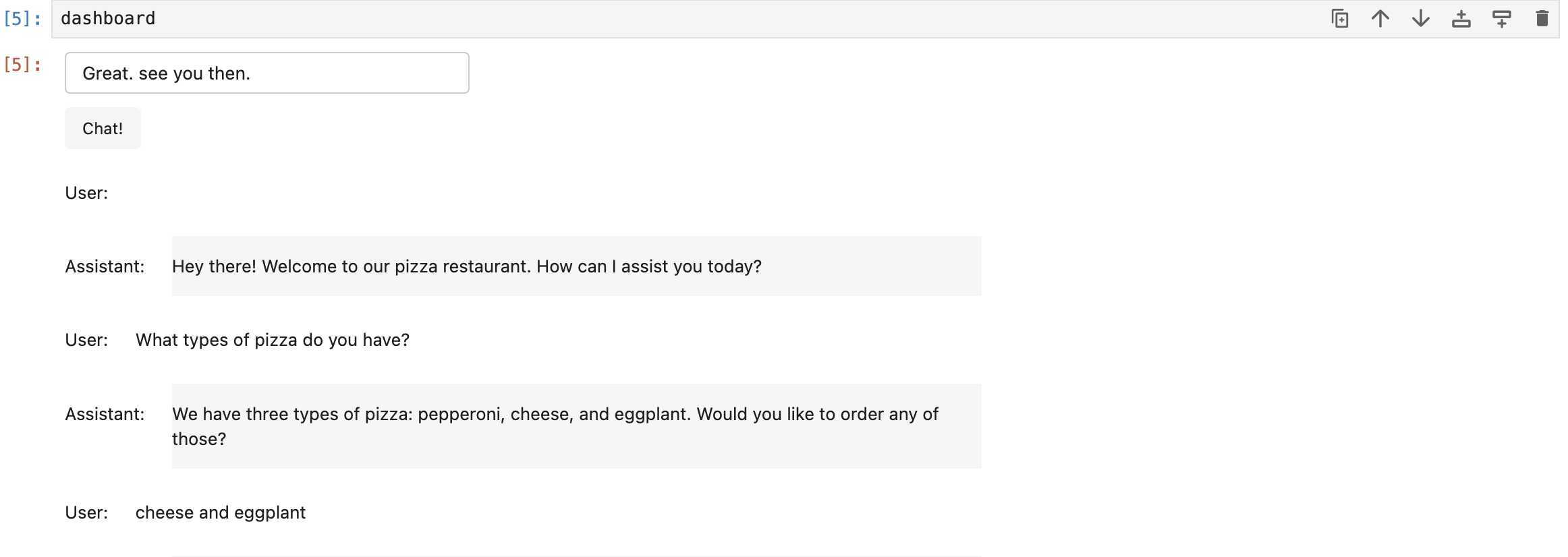

五、调用新模型

用新训练出来的模型:ft:gpt-3.5-turbo-0613:personal::8IavFxZw

from openai import OpenAI

client = OpenAI(api_key=api_key)

prompt = "我家猫太胖了怎么减肥呢?"

messages=[{"role": "user", "content": prompt}]

response = client.chat.completions.create(

model="ft:gpt-3.5-turbo-0613:personal::8IavFxZw",

messages=messages,

temperature = 0

)

print("fine-tune: " + response.choices[0].message.content) ## refer to latest openai package

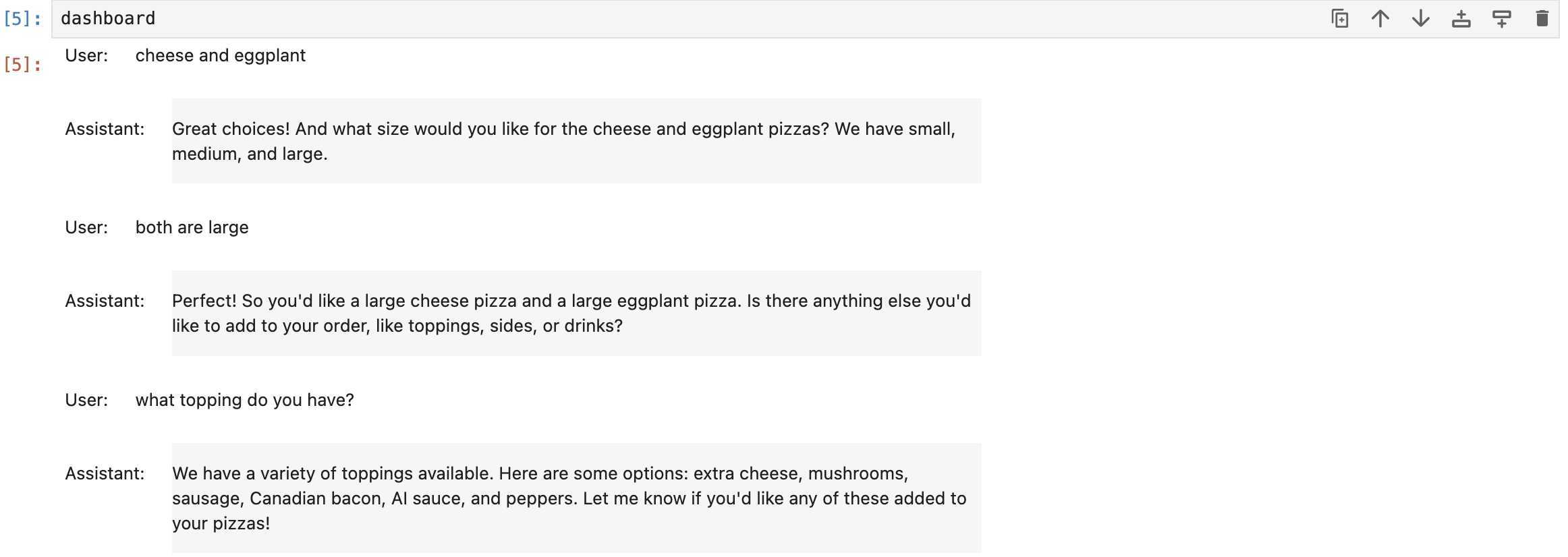

response = client.chat.completions.create(

model="gpt-3.5-turbo",

messages=messages,

temperature = 0

)

print("GPT-3.5-turbo: " + response.choices[0].message.content) ## refer to latest openai package

fine-tune: 减肥的关键是控制猫咪的饮食和增加运动量。具体措施如下:

1. 控制饮食:每天定时定量喂食,不要随便喂食零食和人食,尽量选择低脂肪、低热量的猫粮。

2. 增加运动:每天陪猫咪玩耍,增加运动量,可以使用一些猫咪喜欢的玩具,让猫咪多动起来。

3. 定期体重:每周定期称一次猫咪的体重,记录下来,以便及时调整饮食和运动量。

4. 逐渐减少饮食:如果猫咪的体重一直没有下降,可以逐渐减少猫咪的饮食量,但是不要一下子减少太多,以免影响猫咪的健康。

GPT-3.5-turbo: 如果你的猫太胖了,有几种方法可以帮助它减肥:

1. 控制饮食:确保你的猫只摄入适量的食物。与兽医咨询后,可以选择低热量的猫粮,并按照建议的份量喂食。避免给予过多的零食或人类食物。

2. 分次喂食:将每日的食物分成几次喂给猫,而不是一次性喂给它。这样可以帮助控制猫的食欲,减少过量进食的可能性。

3. 增加运动:鼓励猫进行更多的运动,例如提供玩具、攀爬架和激发其活动性的游戏。每天与猫互动并提供适当的运动,有助于消耗多余的能量。

4. 定期体重监测:定期称量猫的体重,以确保减肥计划的有效性。如果体重没有减少,可能需要调整饮食或增加运动量。

5. 寻求兽医建议:如果你的猫的体重问题严重或你不确定如何正确减肥,最好咨询兽医。他们可以为你的猫制定一个适合其健康状况和需求的减肥计划。

以上是两个模型的输出。

上面的例子,仅仅只是微调gpt-3.5-turbor的过程。如果要使微调出的模型有更好的表现,还有大量的工作要做。